|

henk-reints.nl

Example of the miserable performance of the JavaScript Array Object when it grows large.

Using my hr$dirtool package to scan directories,

I made the following example.

The script first scans the entire folder tree of my C: drive,

then sorts all files by size, and finally it writes the result to an output stream.

The script can be directed to store all information in a JavaScript Array in memory,

or to store this intermediate info in temporary files.

The result is below:

Without temporary file:

CommandPrompt> ddir /o=s c: /s /v /t >d:\temp\y.y

Scanning folder tree C:\ 88929 scanned, 88929 stored, elapsed: 982.052 sec

Sorting 88929 records 1348694 cmp's, elapsed: 1528.518 sec

Writing result (88929 lines) to output stream...

^C

Aborted after a total duration of ca. 1 hour.

Output write time was then approximately 3600 - 1528 - 982 = 1090 sec.

Output file size was by then 1.2 MB which is less than 7% of the expected final size of 18.3 MB.

Total write time would have been ca. 15000 sec = over 4 hours!

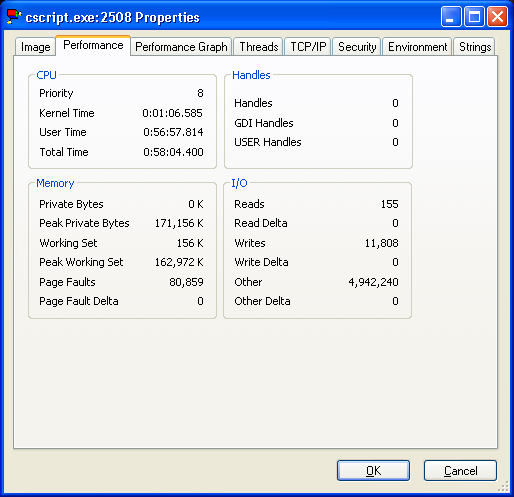

All time the script was using nearly 100% of CPU time.

Its memory usage had grown to over 160 MB.

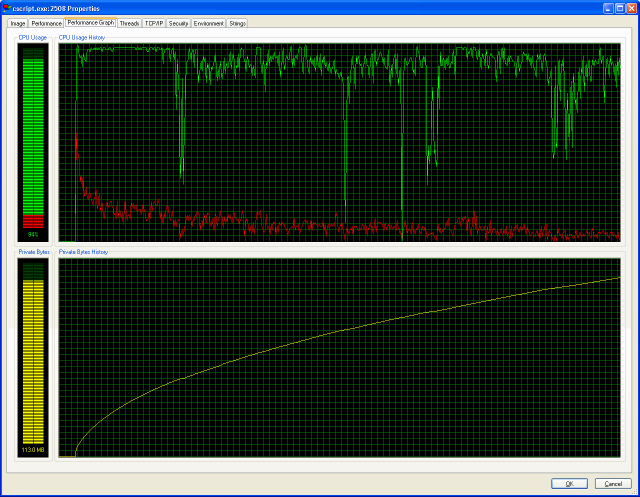

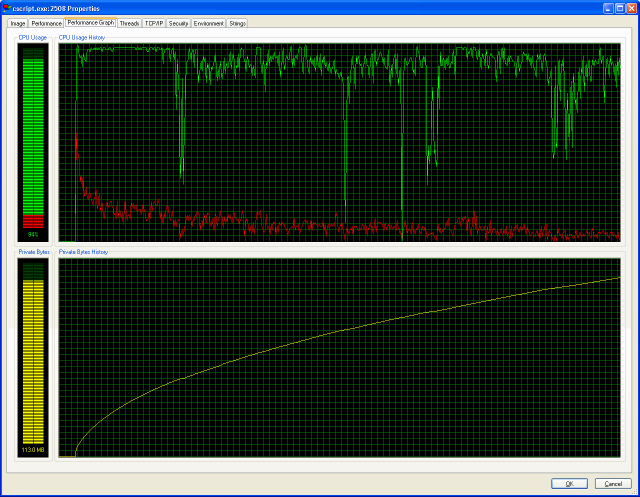

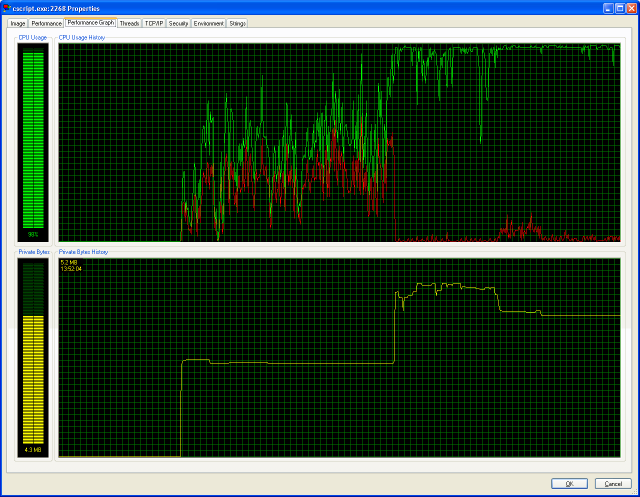

Performance graphs

(made with Process Explorer

from www.sysinternals.com):

As you see, building the Array during the file scan lets it grow to 150 MB in a curve that looks very much like a parabole.

This means addressing elements of the Array has quadratic behaviour!

It probably does a linear search from start to end every time...

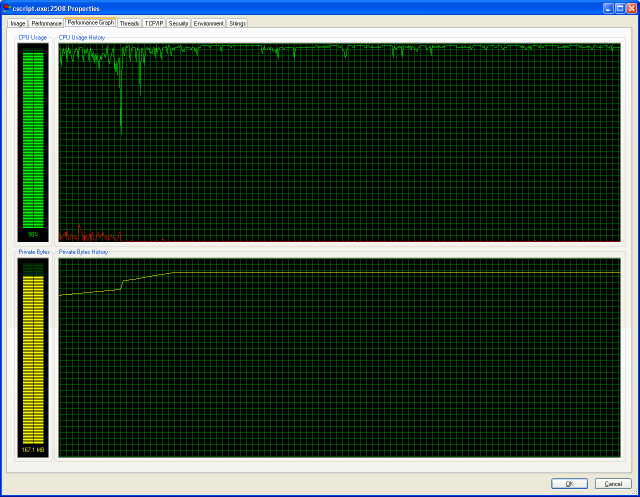

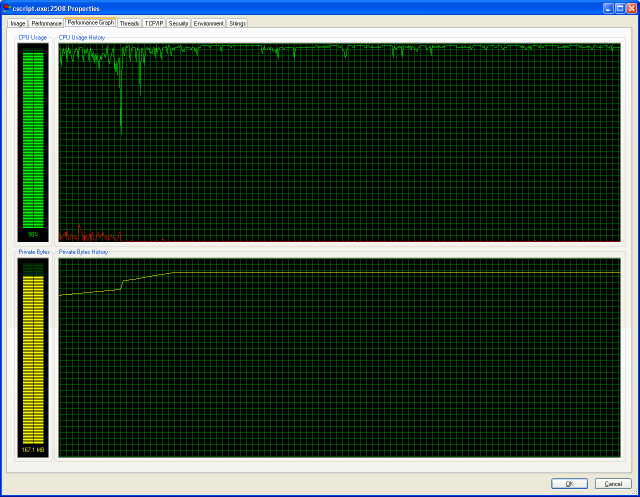

Below is the performance graph during sorting, for which I use my own fast sorting algorithm.

It creates an index Array for which it needs some extra memory, as you can see below:

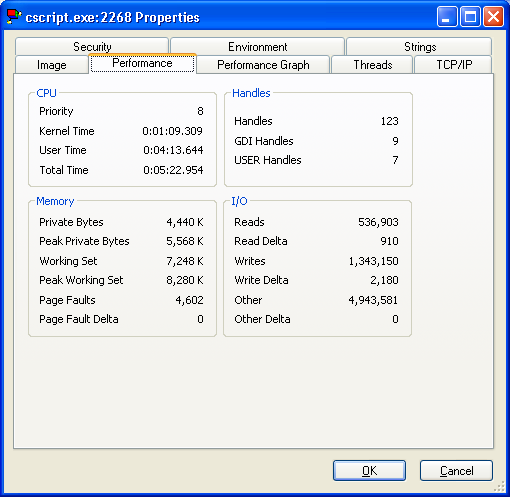

Performance summary:

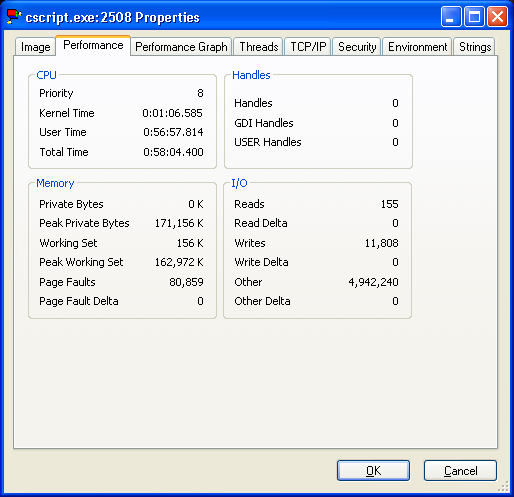

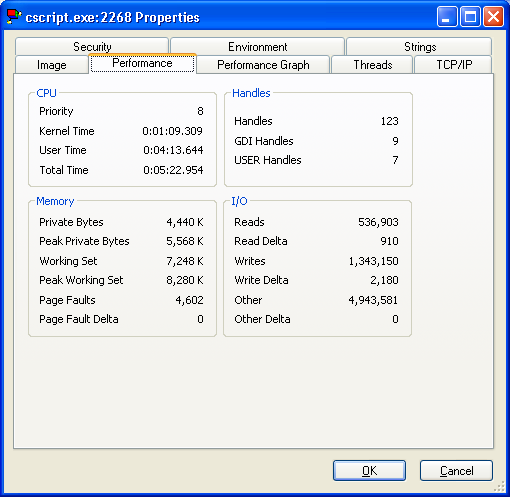

With a temporary file:

in this mode, the script used an Array of 4096 records for sorting chunks of the temporary file.

CommandPrompt> ddir /o=s c: /s /v >d:\temp\z.z

Using temp.file C:\DOCUME~1\reintsh\LOCALS~1\Temp\hr$dirtool20051229134710.tmp

Scanning folder tree C:\ 88943 scanned, 88943 stored, elapsed: 217.663 sec

Sorting 88943 records 1339733 cmp's, 42 tmp.files, elapsed: 148.564 sec

Writing result (88943 lines) to output stream...

Elapsed while writing result: 81.177 sec

Total elapsed: 447.414 sec

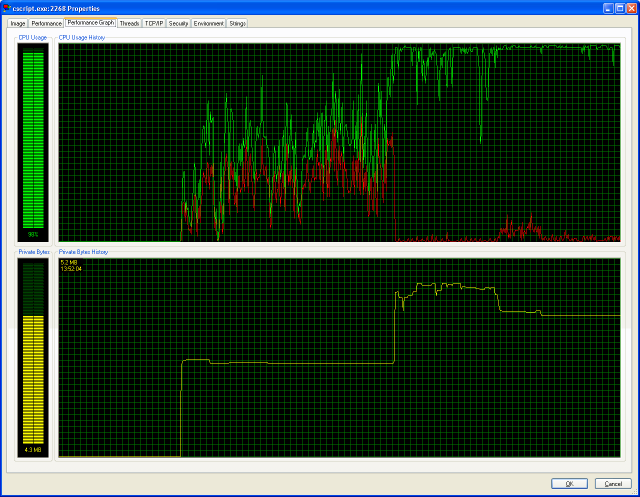

As you can see, it is still not a flashing speed miracle,

but it took only 7.5 minutes to scan the entire folder structure,

sort the nearly 90000 records, and write them nicely formatted to an output file.

It used much less CPU time, especially during the folder scan, and its memory peak was just 5.4 MB.

The size of the temporary file to store the intermediate information was ca. 11 MB.

MUCH BETTER than the above example using the large (88929 elements) JavaScript Array

which had grown to 150 MB for storing these 11 MB of data...

Performance graph:

Performance summary:

Conclusion:

JavaScript Arrays are not suitable for storing large amounts of data.

They waste memory and CPU time, and not even a rocket engine can give it any acceptable speed.

And in spite of this bad behaviour, I do like JavaScript as a language!

Just don't use Arrays for storing large amounts of data.

After all, JavaScript was not designed for handling large amounts of data, but to do nice little things in web pages.

henk-reints.nl

|